Email Is Far From Dead

Jan 8, 2019 by Mark Maier

It is truly the currency of Earned Media. Email has been around long enough that you realize if other mediums went up in smoke tomorrow, Email would still be around. Wishpond was nice enough to take a look at their highest performing A/B tests to show us what is working and what is not in the world of Email Marketing...

"Since August, what we've learned from these tests has improved our average open rates by 40%.

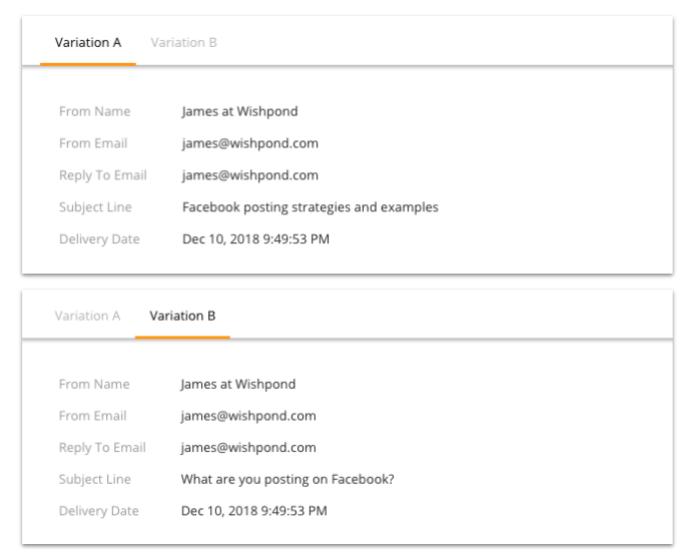

Newsletter A/B Test #1: Question vs Statement

The experts are divided on this subject.

For instance, Dan Zarrella stated clearly that "People are less likely to open emails that include a question mark (?) or a hashtag (#)."

Constant Contact, however, states in no uncertain terms that "Using a question in your subject line is a great way to make a more personal connection with the people viewing your emails."

So… let's check it out.

Test "A" converted 26% better than Test "B" with 100% certainty.
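If you want to check a result like this on your own list, here is a minimal Python sketch of the arithmetic behind "converted X% better" and a confidence figure. The tests in this article don't publish their send and open counts or say which statistical test was used, so the function names, the pooled two-proportion z-test, and the counts in the usage lines are all assumptions for illustration only.

```python
import math


def relative_lift(opens_a, sent_a, opens_b, sent_b):
    """Relative improvement of A's open rate over B's (0.26 means 26% better)."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    return (rate_a - rate_b) / rate_b


def confidence_open_rates_differ(opens_a, sent_a, opens_b, sent_b):
    """Two-sided confidence (1 - p-value) from a pooled two-proportion z-test."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        # Nobody (or everybody) opened: there is no difference to measure.
        return 0.0
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value of a standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return 1 - p_value


# Hypothetical counts chosen for illustration, not Wishpond's data.
lift = relative_lift(2520, 10_000, 2000, 10_000)
confidence = confidence_open_rates_differ(2520, 10_000, 2000, 10_000)
print(f"Lift: {lift:.0%}, confidence: {confidence:.2%}")
```

With lists this large, the confidence figure can round to 100%, although a test of this kind never reaches exactly 100%. The same open rates on only a hundred sends per variant would fall well short of the usual 95% threshold, which is why list size matters as much as the lift itself.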

Actionable Takeaway from this Email A/B Test:

Perhaps the question is too commonly asked. Perhaps the strategies and examples are too desirable. But, for us, questions don't result in higher newsletter open rates.

Your newsletter subscribers want to know what they're going to get when they click on an email. Don't beat around the bush with vague questions.

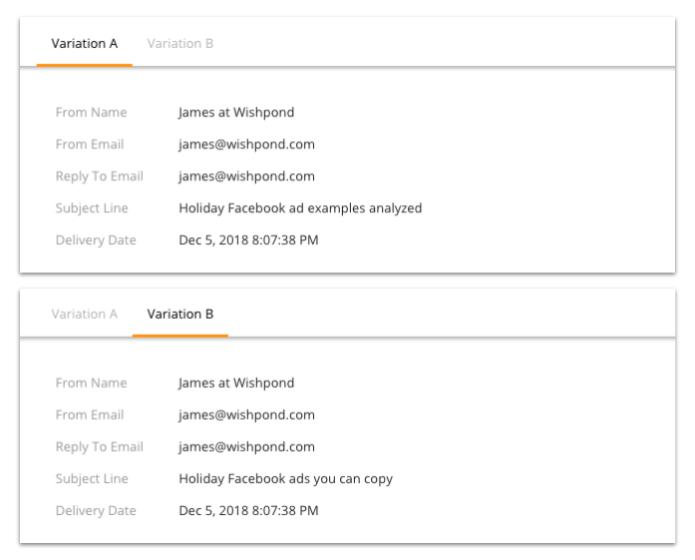

Email A/B Test #2: "Analyzed" vs "You can Copy"

This test sought to determine if our recipients responded better to the idea of marketing strategies/examples/best practices being "analyzed" or if they were more interested in the ability to copy those strategies/examples/best practices.

Here are the newsletter variations:

Test "A" converted 69% better than Test "B" with 100% significance.

Actionable Takeaway from this Newsletter A/B Test:

At the heart of it, this newsletter A/B test asks the question, "Does the modern marketer want to read about data being analyzed, or do they just want something they can take to the bank, today?"

This test, like the best email A/B tests, gives us something to take forward.

People want actionable strategies - strategies they can put on one monitor and put into action on the other.

Because of this test, we've started to move away from thought leadership, and toward actionable, copyable marketing tactics in our content and in our email strategy.

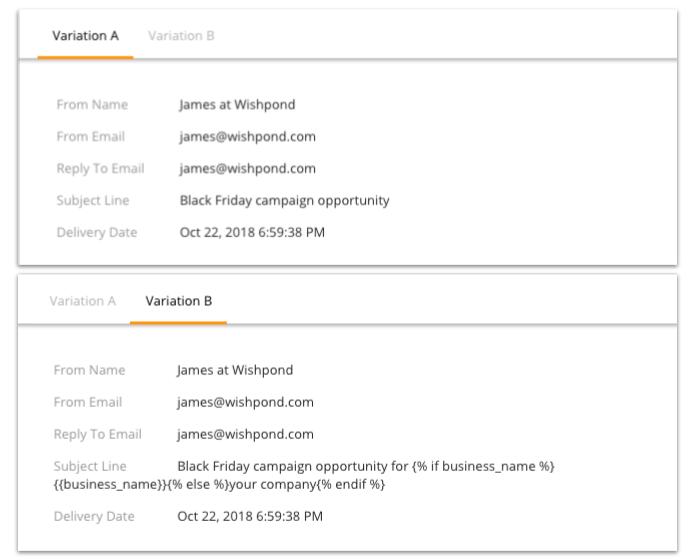

Newsletter A/B Test #3: Inserting company name

This test was only sent to about 15% of our newsletter list, as we don't have a company name for every one of our subscribers. But because the effect was so large, we still found 100% significance.

The test was designed to determine if adding a subscriber's company name to the email's subject line would improve open rates.

Here are the two newsletter variations:

Test "B" converted 16% better than Test "A" with 100% significance.

Actionable Takeaway from this Newsletter A/B Test:

This one doesn't surprise me in the least. Every case study makes it clear that personalization increases email open rates.

The problem, of course, is that you don't always have the personal details which make personalization possible.

So the actionable takeaway from this newsletter A/B test is to get those personal details. Ask your leads for whatever information will increase your email's open rates and final conversions. Then use it to do so.
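Since you won't have a company name for every subscriber, the subject line needs a sensible fallback. Here is a minimal Python sketch of that idea. The function name `build_subject`, the "Company: subject" format, and the sample subscribers are hypothetical; the article doesn't show the exact wording of the subject lines that were tested.

```python
def build_subject(base_subject, company_name=None):
    """Prefix the subject with the company name when one is on file."""
    name = (company_name or "").strip()
    if not name:
        # No usable company name: fall back to the generic subject line.
        return base_subject
    return f"{name}: {base_subject}"


# Hypothetical subscribers, for illustration only.
subscribers = [
    {"email": "ana@example.com", "company": "Acme Co"},
    {"email": "ben@example.com", "company": None},
]
for subscriber in subscribers:
    print(build_subject("5 email tactics you can copy", subscriber["company"]))
```

Keeping the fallback inside one function means an empty or whitespace-only field never produces a subject line with a dangling colon.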

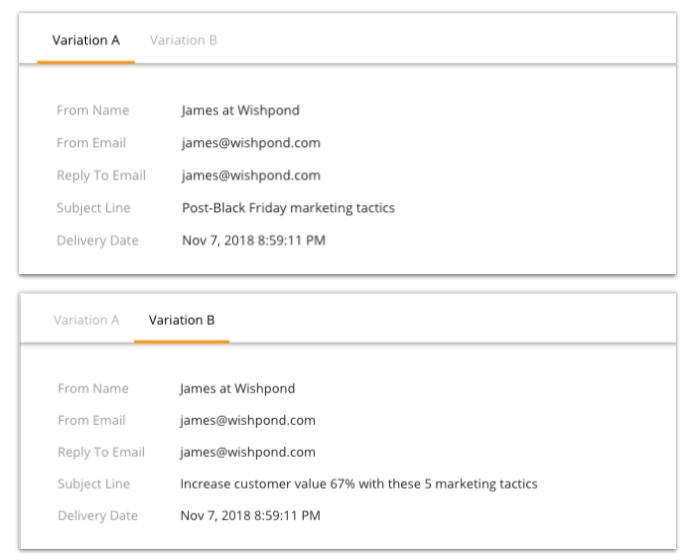

Newsletter A/B Test #4: Relevance vs Specificity

For me, this test was all about proving that it was better for us to be relevant (using "Black Friday") than it was for us to deliver number-specific value.

As one of our most impactful newsletter A/B tests, the results were loud and clear.

Here are the two newsletter variations:

Test "A" converted 64% better than Test "B" with 100% certainty.

Newsletter A/B Test #5: Simplicity vs Specificity

This test was all about specificity, and I honestly thought it was going to go the other way.

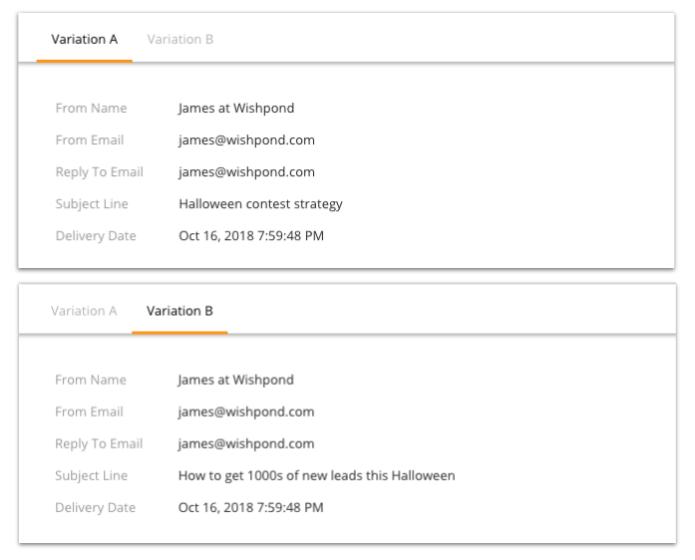

Here are the two newsletter variations:

Test "A" converted 48% better than Test "B" with 100% certainty.

Actionable Takeaway from this Newsletter A/B Test:

This test shows me one thing: If you have a large email list, specificity can hurt.

Are all of our email recipients interested in getting leads from contests? After all, there are many reasons to run a contest, not just lead generation. It's also a term used primarily in the B2B space.

As a result, including that specific goal has done nothing but alienate a portion of our subscribers - resulting in a losing test variation.

So the actionable strategy here is this: Go specific if you know exactly what your entire audience is interested in. Otherwise, err on the side of inclusion.

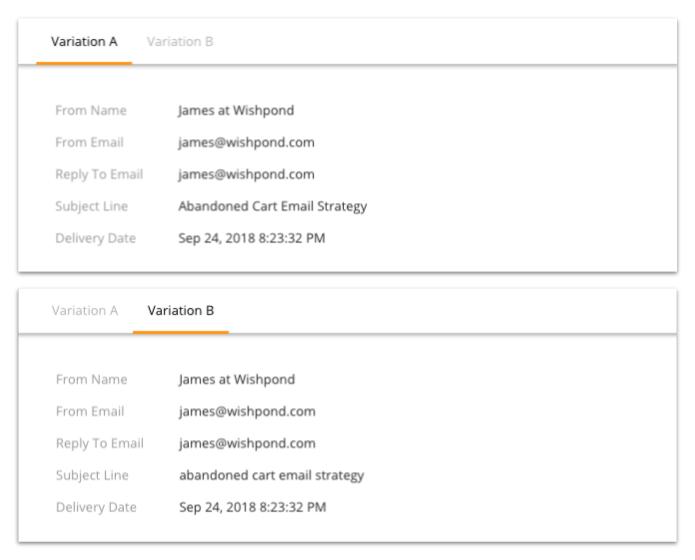

Newsletter A/B Test #6: Capitalization

As a copy editor, testing this hurt me, almost physically.

But our sales team had seen positive results with de-capitalization in their cold email campaigns, so I had no real argument beyond a grammatical one (which doesn't go very far in the face of a bottom line-impacting test).

Here are the variations:

Test "B" converted 80% better than Test "A" with 100% certainty.

Actionable Takeaway from this Newsletter A/B Test:

80% better.

You heard that right. 80.

The hypothesis here is that de-capitalizing your subject lines makes the emails look more personal - like a real person wrote them, forgot to capitalize, and hit "send."

For a possible 80% increase in your newsletter open rates, this one is more than worth testing (if you can bear the grammatical agony).
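If you want to try this on your own list, one way to set it up is to split subscribers into two stable groups and lowercase the subject line for one of them. The Python sketch below is an assumption-heavy illustration: the function names, the hash-based split, and the choice of "B" as the de-capitalized variant are mine, not details taken from the original test.

```python
import hashlib


def assign_variant(email, test_name):
    """Deterministically place a subscriber in variant "A" or "B"."""
    key = f"{test_name}:{email.strip().lower()}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # An even hash value goes to A, an odd one to B, for a roughly even split.
    return "A" if int(digest, 16) % 2 == 0 else "B"


def subject_for(email, subject, test_name="capitalization"):
    """Variant A keeps the subject as written; variant B is fully lowercased."""
    if assign_variant(email, test_name) == "A":
        return subject
    return subject.lower()


# Hypothetical address and subject line, for illustration only.
print(subject_for("ana@example.com", "7 Newsletter Tests Worth Copying"))
```

Hashing the address instead of picking at random means a subscriber always lands in the same group, so a resend or a follow-up email doesn't mix the two variants.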

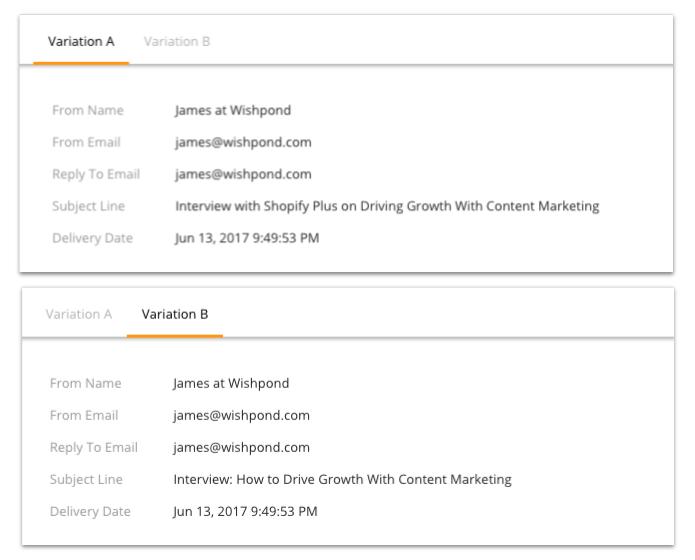

Newsletter A/B Test #7: Brand name vs no brand name

This was actually three tests, run more than a year apart.

The first is "Brand Name vs No Brand Name" - straight up.

That one looks like this:

Test "A" converted 23% better than Test "B" with 100% certainty.

So, now we know that a brand name that our subscribers recognize has an impact on their chance to open an email.

But what about a brand name our subscribers don't recognize?

Will the specificity (the case study element of this article) win, or will an unknown brand name turn people off?

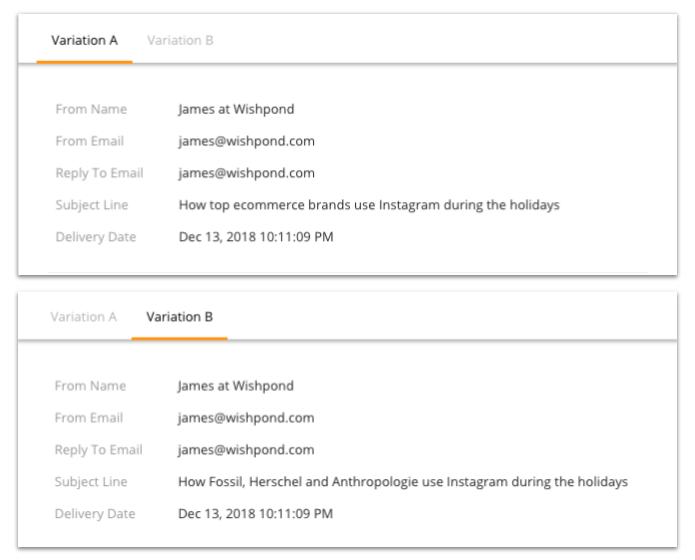

Here's the next test we ran:

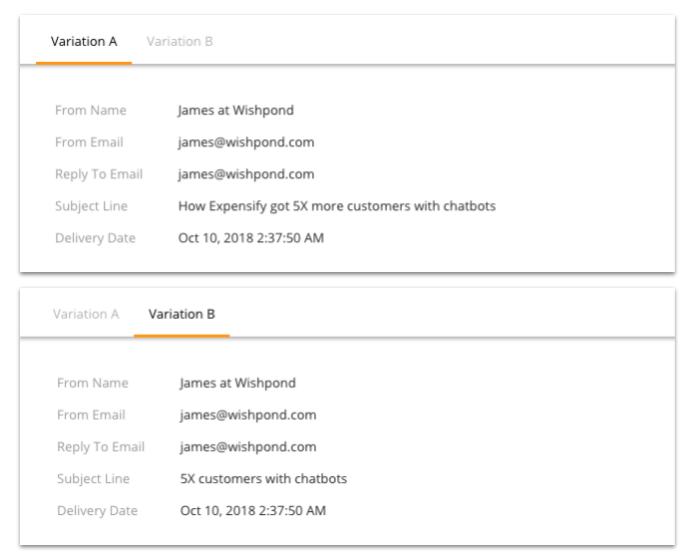

Test "B" converted 34% better than Test "A" with 100% certainty.

I wanted to validate this, as I think it's an incredibly replicable strategy. So I did.

Here's the third iteration of this A/B test, with a straight brand name introduction into the subject line:

Test "B" converted 4% better than Test "A" with 97% certainty.

So, not quite as impactful, but I do feel validated. Well worth testing yourself, in my book.

Actionable Takeaway from this Newsletter A/B Test:

So what did we see there?

It turns out that a brand name converts better than no brand name, but not if the brand name isn't recognizable.

My hypothesis is that this test, like the "specificity" test above, is all about alienation.

If you throw a variable into your newsletter subject lines which has a chance to exclude people who don't want or don't know about that variable (jargon, in particular, is a dangerous thing in email marketing), you shouldn't be surprised if you see a drop in open rates.

However, if you can introduce a variable which everyone has heard of and can relate to (especially if you're talking about how that variable found success), then you work to include your subscribers.